Hello friends! It’s time for part 3 of this mini-series where I’ll continue to show you how to build your own Copilot with JavaScript and OpenAI! 👀

In this post, I’ll focus a little more on the moderation element we touched on in my last post. With the help of GitHub Copilot, we’ll make a few changes to the code so that instead of just showing the user the moderation results, we block the response from being seen unless every moderation check comes back false (meaning nothing was flagged).

Recap

Hold up ✋🏻 Did you read parts 1 and 2 of this series? Be sure to check out those posts first before continuing here so you don’t miss the context you need to follow this guide! ✅

Check moderation results for a true value

So the first addition we need to make to our code is a check against all of the values in our moderation results object. I’m going to start by adding a constant called moderationResults, instead of just logging the categories object directly to the console as a table from the moderation variable that holds the full API output.

const moderationResults = moderation.data.results[0].categories;

Now I’m going to use this moderationResults constant in some more code to check all of the values in this object, making sure none of them is true. Let’s call on GitHub Copilot to help with this!

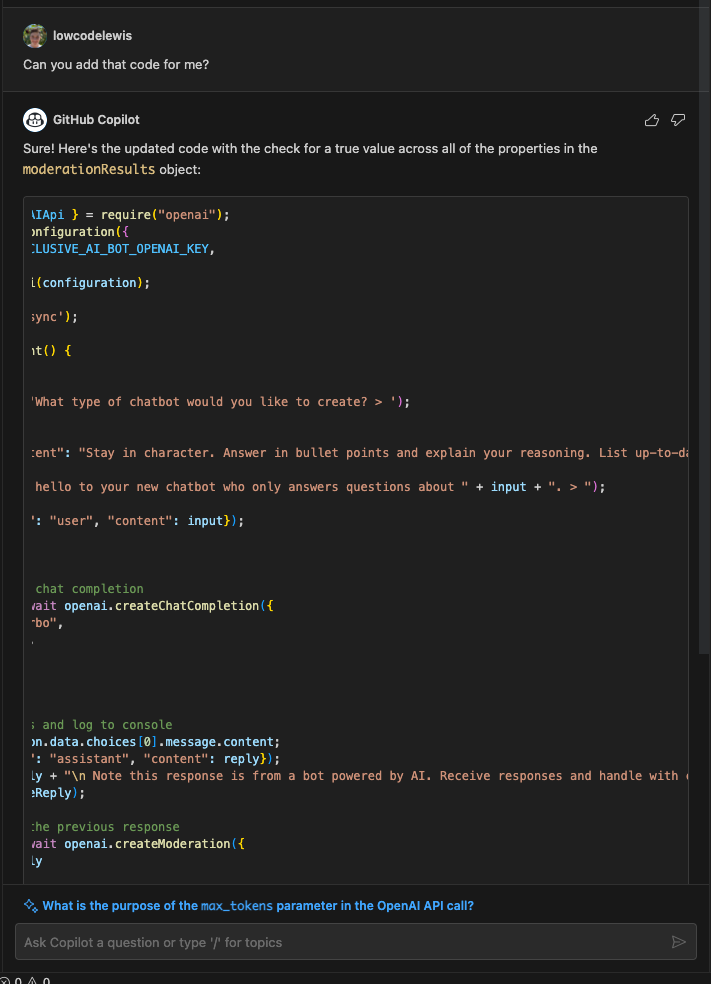

I’ve prompted GitHub Copilot with the following…

I need code added to line 45 which will check for a true value across all of the properties in the object moderationResults

Lewis

Now let’s see what it says…

Here’s the code you can add to line 45 to check for a true value across all of the properties in the object moderationResults:

const isSafe = Object.values(moderationResults).every(value => value === false);

This code uses the Object.values() method to get an array of all the values in the moderationResults object, and then uses the every() method to check if all of the values are false. The isSafe variable will be true if all of the values are false, indicating that the response is safe.

GitHub Copilot
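To see what every() is doing here without calling the API, here’s a small sketch you can run in Node.js. The two categories objects are made-up samples (hypothetical data, not real API output) and only include a few category names.

```javascript
// Hypothetical sample data: a shortened "categories" object where nothing was flagged.
const cleanCategories = { hate: false, "self-harm": false, sexual: false, violence: false };

// Hypothetical sample data: the same shape, with one category flagged.
const flaggedCategories = { hate: false, "self-harm": false, sexual: false, violence: true };

// every() returns true only if the callback returns true for every value,
// so isSafe is true only when no category was flagged.
const isSafeClean = Object.values(cleanCategories).every(value => value === false);
const isSafeFlagged = Object.values(flaggedCategories).every(value => value === false);

console.log(`Clean sample safe? ${isSafeClean}`);     // prints "Clean sample safe? true"
console.log(`Flagged sample safe? ${isSafeFlagged}`); // prints "Flagged sample safe? false"
```

One thing to keep in mind: every() on an empty array returns true, so an empty categories object would also count as safe. If that worries you, check that the object has at least one key before trusting the result.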

Cool! So now I’m going to ask GitHub Copilot to give me the updated code for my file and I’ll paste it in to see it work!

You can also select the middle icon on the code box GitHub Copilot surfaces to insert the code at the currently selected line in your file. Now let’s take a look at the code GitHub Copilot has given me to work with…

// Return the moderation output to the user
console.log("To ensure we gave you a safe response, we did a double check and here's the moderation results for the response we gave:");
const moderationResults = moderation.data.results[0].categories;
const isSafe = Object.values(moderationResults).every(value => value === false);
console.log(moderationResults);
console.log(`Is the response safe? ${isSafe}`);

Cool! Okay… this is a good start, but I have some more steps to take before I can push this code to users to play around with! 👀
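The code above only looks at results[0], which is fine when you send a single input. The moderation endpoint also accepts an array of inputs and returns one entry in results per input, and each entry carries a top-level flagged boolean (true when any category is flagged) alongside categories. As a hedged sketch, a helper (hypothetically named isModerationSafe) could check every result and cross-check flagged too. It assumes the response shape used in this post (moderation.data.results, as returned by createModeration in the v3 openai Node package); with the v4 package, moderations.create() returns the results array directly on the response object, without .data, so you’d adjust the path.

```javascript
// Hypothetical helper: returns true only if every moderation result passed.
// Assumes the v3-style response shape used in this post: { data: { results: [...] } }.
function isModerationSafe(moderation) {
  const results = moderation?.data?.results;

  // Fail closed: treat a missing or empty results array as unsafe.
  if (!Array.isArray(results) || results.length === 0) {
    return false;
  }

  return results.every(result => {
    const categories = result.categories ?? {};
    const noCategoryFlagged = Object.values(categories).every(value => value === false);
    // "flagged" is the API's own overall verdict for this result.
    return result.flagged === false && noCategoryFlagged;
  });
}

// Hypothetical sample responses (made-up data in the same shape).
const passing = { data: { results: [{ flagged: false, categories: { hate: false, violence: false } }] } };
const failing = { data: { results: [{ flagged: true, categories: { hate: false, violence: true } }] } };

console.log(isModerationSafe(passing)); // prints true
console.log(isModerationSafe(failing)); // prints false
console.log(isModerationSafe({}));      // prints false
```

Returning false when results is missing is a deliberate fail-closed choice: if the response isn’t shaped the way you expect, the reply stays hidden rather than slipping through.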

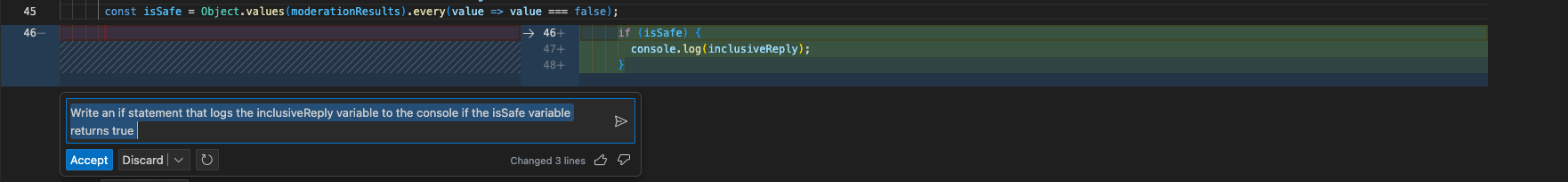

Prevent users from receiving responses that fail moderation

So now we know whether our response is safe for users to see, based on the value of the constant isSafe. Let’s replace the console.log code GitHub Copilot placed above with an if statement that prevents users from receiving unsafe responses. I’ll replace the following two lines:

console.log(moderationResults);
console.log(`Is the response safe? ${isSafe}`);

with this code…

if (isSafe) {
  console.log(inclusiveReply);
}

Oh and check this out… I had GitHub Copilot write that code again 😉

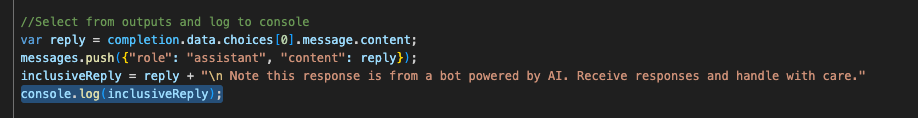

I’m also going to remove the earlier line where I was logging the reply to the console, so users won’t see the reply until it has passed moderation.
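With only an if statement, an unsafe reply simply produces no output, which could leave users wondering what happened. One option is an else branch that prints a short message instead. Here’s a minimal sketch of that idea; the showReply function and the fallback wording are hypothetical, and plain strings stand in for inclusiveReply.

```javascript
// Hypothetical fallback text shown when a reply fails moderation.
const FALLBACK_MESSAGE = "Sorry, I can't show that response because it didn't pass our safety checks.";

// Returns the text the user should see: the reply if it passed moderation,
// otherwise the fallback message.
function showReply(reply, isSafe) {
  if (isSafe) {
    return reply;
  }
  return FALLBACK_MESSAGE;
}

console.log(showReply("Here is an inclusive answer.", true)); // prints the reply
console.log(showReply("Some unsafe text.", false));           // prints the fallback message
```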

OpenAI Ethical Guidelines

So that’s it! That’s the end of this series, and hopefully you now have a good idea of how you can build your own command line Copilot using JavaScript and OpenAI’s API. You might find this last change a little difficult to test, because you’re unlikely to get a reply that fails moderation: moderation is really a second check on whether the response follows ethical guidelines, since OpenAI’s model already has to adhere to strict guidelines that prioritise safety, respect and the promotion of positive interactions.
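Because real replies rarely fail moderation, one way to exercise the blocking path is to swap the moderation call for a fake that returns a flagged result. The sketch below assumes the moderation call is passed in as a function (a hypothetical moderate parameter) so a test can replace it; the getModeratedReply name and the fake responses are also hypothetical. The fake responses mirror the moderation.data.results[0].categories access used in this post.

```javascript
// Hypothetical wrapper: runs moderation through an injected function and
// returns the reply only if no category was flagged, otherwise null.
async function getModeratedReply(reply, moderate) {
  const moderation = await moderate(reply);
  const moderationResults = moderation.data.results[0].categories;
  const isSafe = Object.values(moderationResults).every(value => value === false);
  return isSafe ? reply : null;
}

// Fake moderation functions for testing (made-up data, no network calls).
const fakeSafeModeration = async () => ({
  data: { results: [{ flagged: false, categories: { hate: false, violence: false } }] },
});
const fakeFlaggedModeration = async () => ({
  data: { results: [{ flagged: true, categories: { hate: false, violence: true } }] },
});

(async () => {
  console.log(await getModeratedReply("A friendly reply.", fakeSafeModeration));   // prints "A friendly reply."
  console.log(await getModeratedReply("A flagged reply.", fakeFlaggedModeration)); // prints null
})();
```

In the app itself, assuming the v3 openai package that matches the .data.results access here, moderate could be something like (text) => openai.createModeration({ input: text }).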

Did you like this content? 💖

Check out some of the other posts on my blog, and if you like those too, be sure to subscribe to get my posts directly in your inbox for free!
